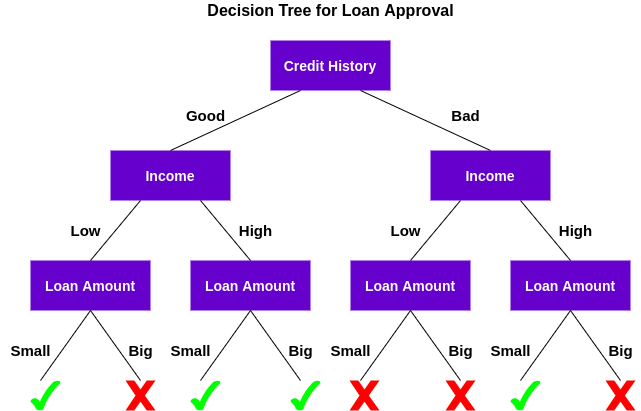

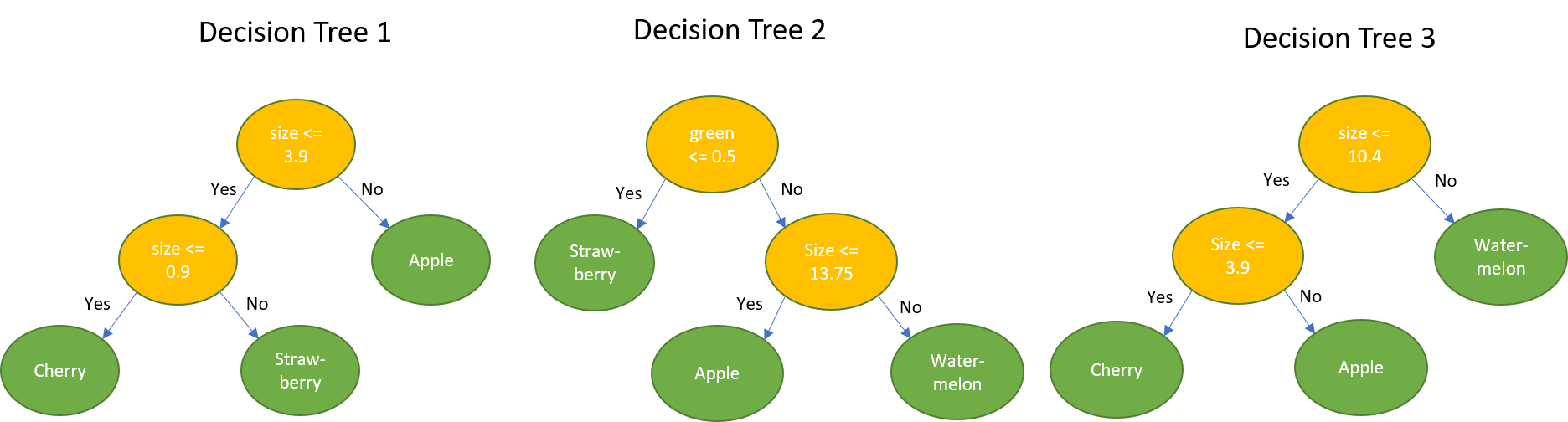

Decision Tree Random Forest Regression

Decision trees are a type of model used for both classification and regression. A random forest regressor learns a random forest (an ensemble of decision trees) for regression: it uses many decision trees and combines their predictions. Also, it works fine when the data mostly contain ...

A random forest builds multiple decision trees and merges their outputs to get a more accurate and stable prediction than a single tree. One way to find a good number of estimators is to use `GridSearchCV` from sklearn. Each tree in the forest is built like an ordinary decision tree, but under different rules: it is trained on a bootstrap sample of the data and considers only a random subset of the features at each split.
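The search over the number of estimators mentioned above can be sketched as follows. This is a minimal illustration, not a recommended configuration: the synthetic data, the candidate values for `n_estimators`, and the scoring choice are all assumptions made for the example.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic regression data (an assumption; the post does not supply a data set).
rng = np.random.default_rng(0)
X = rng.uniform(0, 10, size=(200, 3))
y = 2.0 * X[:, 0] + np.sin(X[:, 1]) + rng.normal(scale=0.5, size=200)

# Candidate forest sizes; these particular values are illustrative only.
param_grid = {"n_estimators": [10, 50, 100]}

# 5-fold cross-validation over the grid, scored by negative mean squared error.
search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    cv=5,
    scoring="neg_mean_squared_error",
)
search.fit(X, y)

best_n = search.best_params_["n_estimators"]
print("best n_estimators:", best_n)
print("mean CV score per candidate:", search.cv_results_["mean_test_score"])
```

In practice the score often flattens out as trees are added, so the "best" value is usually the smallest forest that gets close to the plateau rather than a sharp optimum.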

In this blog, we compare random forests and decision trees. For regression, we will work with data that contains the salaries of employees based on their position. In R, we will use the `randomForest` function from the `randomForest` package, while a single tree is fit with a call such as `rpart(formula = carsales ~ age + gender + miles + debt + income, data = inputdata, method = ...)`. Random forest regression can also be implemented in Python with sklearn.

Decision trees are the basic building blocks of a random forest. Classification and regression trees, or CART for short, is a term introduced by Leo Breiman to refer to decision tree algorithms. Random forests are an example of an ensemble learner built on decision trees, and they can be used for both classification and regression. A random forest reduces the variance of the individual decision trees by randomizing how each tree is built. Because it generates many decision trees, a random forest also allows us to determine the most important predictors among the explanatory variables.

In Studio (classic), you can find the module under Machine Learning, Initialize Model. For Number of random splits per node, type the number of splits to use when building each node of the tree.
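To make the comparison concrete, here is a small Python sketch that fits a decision tree and a random forest to salary-by-position data. The ten position levels and salary figures below are made up for illustration; they stand in for the salary data set, which the post does not include.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.tree import DecisionTreeRegressor

# Hypothetical salary data: position level (1-10) and salary. Values are illustrative.
levels = np.arange(1, 11).reshape(-1, 1)
salaries = np.array(
    [45_000, 50_000, 60_000, 80_000, 110_000,
     150_000, 200_000, 300_000, 500_000, 1_000_000],
    dtype=float,
)

# A single, fully grown tree memorizes the training points.
tree = DecisionTreeRegressor(random_state=0).fit(levels, salaries)

# A forest averages many trees, each fit to a bootstrap sample of the data.
forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(levels, salaries)

# Predict the salary for an in-between position level.
query = np.array([[6.5]])
tree_pred = tree.predict(query)[0]
forest_pred = forest.predict(query)[0]

print(f"decision tree prediction: {tree_pred:,.0f}")
print(f"random forest prediction: {forest_pred:,.0f}")
```

The single tree can only return one of the salaries it saw during training, whereas the forest returns an average over its trees and so can land between two training values.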

A common question is when to choose linear regression, decision tree regression, or random forest regression. A random forest consists of a committee of decision trees (also known as classification trees or CART regression trees, depending on the task they solve). Random forests are often presented in the context of classification, but they apply to regression as well. A random forest is an ensemble of randomized decision trees: an ensemble bagging algorithm that aims to achieve low prediction error.
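One hedged way to approach the choice between the three models is to cross-validate all of them on the data at hand. The sketch below uses two synthetic targets (one linear, one strongly nonlinear); the data, noise level, and model settings are assumptions chosen only to show the pattern, not a general benchmark.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic single-feature data (an assumption for illustration).
rng = np.random.default_rng(42)
X = rng.uniform(0, 10, size=(300, 1))
noise = rng.normal(scale=1.0, size=300)

targets = {
    "linear": 3.0 * X[:, 0] + noise,              # straight-line relationship
    "nonlinear": 10.0 * np.sin(X[:, 0]) + noise,  # wavy relationship
}

models = {
    "linear regression": LinearRegression(),
    "decision tree": DecisionTreeRegressor(random_state=0),
    "random forest": RandomForestRegressor(n_estimators=100, random_state=0),
}

# Mean 5-fold cross-validated R^2 for every (target, model) pair.
scores = {}
for target_name, y in targets.items():
    for model_name, model in models.items():
        r2 = cross_val_score(model, X, y, cv=5, scoring="r2").mean()
        scores[(target_name, model_name)] = r2
        print(f"{target_name:>9} target | {model_name:<17} | R^2 = {r2:.3f}")
```

On data like this, linear regression tends to do best when the relationship really is a straight line, while the tree-based models do far better on the nonlinear target. The forest usually improves on the single tree because averaging smooths out the noise each tree has memorized.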

Random forest is one of the simplest and most widely used algorithms. It works well when we want to avoid the overfitting that comes from building a single decision tree. A split is the point at each level of a tree where the data are divided according to the value of a feature. In regression, an average is taken over the outputs of all the trees and is considered the final result.

Each individual tree is a fairly simple model that has branches. In this repo, I explore the differences between decision tree regression ML models and random forest regression ML models.

Our goal here is to build a team of decision trees, each making a prediction about the dependent variable; the final prediction of the random forest is the average of the predictions of all trees. In R, this is done by using 2/3 of the data set to develop each decision tree. Are there any specific characteristics of the data that would favor one specific algorithm among the three mentioned above? This tutorial builds on linear and logistic regression and uses fairly familiar code, but from a different package.
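The averaging step can be checked directly in Python. The sketch below, on synthetic data that is an assumption of this example, collects the prediction of every tree in a fitted sklearn `RandomForestRegressor` (exposed through its `estimators_` attribute) and compares their mean with the forest's own prediction.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic training data (an assumption for illustration).
rng = np.random.default_rng(1)
X = rng.uniform(0, 10, size=(150, 2))
y = X[:, 0] ** 2 + 3.0 * X[:, 1] + rng.normal(scale=1.0, size=150)

# By default each tree is fit to a bootstrap sample of the training rows.
forest = RandomForestRegressor(n_estimators=25, random_state=1).fit(X, y)

# New points to predict.
X_new = rng.uniform(0, 10, size=(5, 2))

# One row of predictions per tree: shape (n_trees, n_points).
per_tree = np.stack([tree.predict(X_new) for tree in forest.estimators_])

# Average over the trees and compare with the forest's own prediction.
manual_average = per_tree.mean(axis=0)
forest_prediction = forest.predict(X_new)

print("average of trees:", np.round(manual_average, 2))
print("forest.predict  :", np.round(forest_prediction, 2))
print("spread across trees (std):", np.round(per_tree.std(axis=0), 2))
```

The spread across trees gives a rough sense of how much the individual trees disagree, which is the variance that averaging is meant to reduce.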

